ML Kit brings Google's machine learning expertise to mobile apps, making it simple to use ML techniques in your own applications.

Whether you need the real-time capabilities of Mobile Vision's on-device models or the flexibility of custom TensorFlow Lite models, ML Kit makes it possible with just a few lines of code.

Here is the GitHub link; you can also download the project from there.

This article walks you through simple steps to add Text Recognition, Language Identification, and Translation from a real-time camera feed to your existing Android app. It also highlights best practices for using CameraX with ML Kit APIs.

In this post, you'll build an Android app with ML Kit. The app uses the ML Kit Text Recognition on-device API to recognize text from the real-time camera feed, and the ML Kit Language Identification API to identify the language of the recognized text. Finally, it translates that text into any of 59 languages using the ML Kit Translation API.

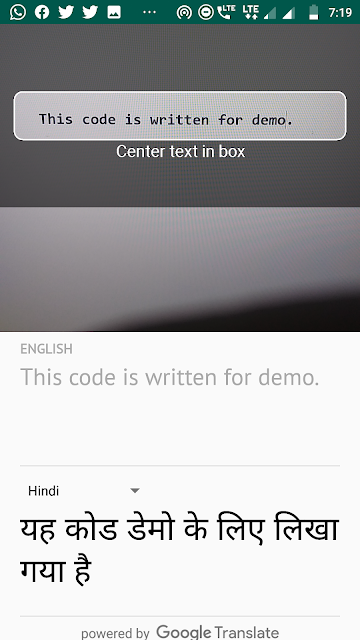

When you're done, your app should look something like the picture above.

Basic requirements:

- Android Studio v4.0+

- A device to run the application

- The sample code

- Basic knowledge of Kotlin, since the code is written in Kotlin

Steps to follow:

1- Download the sample code and open it in Android Studio.

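2- Check that the required dependencies are present in app/build.gradle:

```groovy
// Add CameraX dependencies
// ...

// ML Kit text recognition
implementation 'com.google.android.gms:play-services-mlkit-text-recognition:16.0.0'
```

If they are already there, do not edit them.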

3- Now launch the app to confirm that it builds and runs.

Keep in mind that the text recognition functionality has not been implemented yet.

4- Add text recognition

Instantiate the ML Kit Text Detector

Replace the //TODO at the top of TextAnalyzer.kt with the following code to instantiate TextRecognition. This gives you a handle to the text recognizer for use in later steps. We also add the detector as a lifecycle observer so that it is properly closed when it is no longer needed.

TextAnalyzer.kt

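```kotlin
import com.google.mlkit.vision.text.TextRecognition

// Inside the TextAnalyzer class:
private val detector = TextRecognition.getClient()

init {
    // Close the detector automatically when the lifecycle is destroyed
    // (assumes TextAnalyzer receives a `lifecycle: Lifecycle` from the sample project)
    lifecycle.addObserver(detector)
}
```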
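Registering the detector as a lifecycle observer means the lifecycle owner closes it for you when it is destroyed, so you never have to remember to call close() yourself. The plain-Kotlin sketch below models that idea so it can run without Android; `SimpleLifecycle` and `FakeDetector` are hypothetical stand-ins, not the androidx.lifecycle or ML Kit classes.

```kotlin
import java.io.Closeable

// Hypothetical, simplified lifecycle (not androidx.lifecycle.Lifecycle)
class SimpleLifecycle {
    private val observers = mutableListOf<Closeable>()
    var destroyed = false
        private set

    // Register a resource that must be released when the owner is destroyed
    fun addObserver(resource: Closeable) {
        observers += resource
    }

    // Models the ON_DESTROY event: close every registered resource exactly once
    fun destroy() {
        if (destroyed) return
        destroyed = true
        observers.forEach { it.close() }
        observers.clear()
    }
}

// Hypothetical stand-in for an ML Kit detector client
class FakeDetector : Closeable {
    var isClosed = false
        private set

    override fun close() {
        isClosed = true
    }
}
```

Usage: create a `FakeDetector`, register it with `addObserver()`, and it stays open until `destroy()` is called.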

Run text recognition on an InputImage (created from the camera buffer)

The CameraX library provides a stream of images from the camera ready for image analysis. Replace the recognizeText() method in the TextAnalyzer class to use ML Kit text recognition on each image frame.

TextAnalyzer.kt

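```kotlin
import android.util.Log
import android.widget.Toast
import com.google.android.gms.tasks.Task
import com.google.mlkit.vision.common.InputImage
import com.google.mlkit.vision.text.Text

// Inside the TextAnalyzer class:
private fun recognizeText(
    image: InputImage
): Task<Text> {
    // Pass the image to the ML Kit text recognizer
    return detector.process(image)
        .addOnSuccessListener { text ->
            // Publish the recognized text (assumes `result` is the sample's MutableLiveData<String>)
            result.value = text.text
        }
        .addOnFailureListener { exception ->
            // Log the error and show a short message to the user
            Log.e("TextAnalyzer", "Text recognition error", exception)
            val message = exception.message ?: "Text recognition failed"
            Toast.makeText(context, message, Toast.LENGTH_SHORT).show()
        }
}
```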

The following line calls the method above to start text recognition. Add it at the end of the analyze() method. Note that you must call imageProxy.close() once analysis of the image is complete; otherwise the live camera feed will not be able to deliver further images for analysis.

TextAnalyzer.kt

```kotlin
// croppedBitmap is the cropped camera frame prepared earlier in analyze() by the sample code
recognizeText(InputImage.fromBitmap(croppedBitmap, 0)).addOnCompleteListener {
    // Release the frame so CameraX can deliver the next one
    imageProxy.close()
}
```
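Why does a missing imageProxy.close() stall the feed? With CameraX's default keep-only-latest backpressure strategy, the analyzer receives a new frame only after the previous one has been closed, and frames that arrive in the meantime are dropped except for the newest. The plain-Kotlin simulation below is a simplified model of that behavior, not the CameraX API; `KeepOnlyLatestGate` and its integer "frames" are hypothetical.

```kotlin
// Hypothetical simulation of keep-only-latest backpressure (not the CameraX API)
class KeepOnlyLatestGate(private val analyze: (Int) -> Unit) {
    private var inFlight: Int? = null   // frame currently held by the analyzer
    private var pending: Int? = null    // newest frame waiting to be delivered

    // Called for every frame the camera produces
    fun onNewFrame(frame: Int) {
        if (inFlight == null) {
            deliver(frame)
        } else {
            // The analyzer is busy: keep only the newest frame, dropping any older pending one
            pending = frame
        }
    }

    // Models imageProxy.close(): release the frame and deliver the newest pending one
    fun close() {
        inFlight = null
        pending?.let { next ->
            pending = null
            deliver(next)
        }
    }

    private fun deliver(frame: Int) {
        inFlight = frame
        analyze(frame)
    }
}
```

If the analyzer never closes the first frame, it never sees another one; as soon as `close()` is called, it jumps straight to the most recent frame rather than working through a backlog.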

Run your app again

Your app should now recognize text from the camera in real time.

You can check this by pointing your camera at any text.

If your app is not recognizing any text, try 'resetting' the detection by pointing the camera at a blank space before pointing it at text.

5- Add language identification

Instantiate the ML Kit Language Identifier

MainViewModel.kt is located in the main folder. Navigate to the file and add the following field to MainViewModel.kt. This is how you get a handle to the language identifier to use in the following step.

MainViewModel.kt

```kotlin
private val languageIdentifier = LanguageIdentification.getClient()
```

You also want to make sure that the clients are properly shut down when they are no longer needed. To do this, override the onCleared() method of the ViewModel with the following code.

MainViewModel.kt

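```kotlin
override fun onCleared() {
    // Release the language identifier's resources
    languageIdentifier.close()
    // Drop all cached Translator clients (translators is the sample's translator cache)
    translators.evictAll()
}
```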
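The translators.evictAll() call only frees resources if the cache closes each client it drops. The sketch below shows one way such a cache can work in plain Kotlin: a small LRU cache built on `LinkedHashMap` in access order that closes a client whenever it is evicted. `ClosingLruCache` and `FakeTranslator` are hypothetical; the assumption is that the sample's `translators` cache behaves similarly, closing evicted Translator instances.

```kotlin
import java.io.Closeable

// Hypothetical stand-in for an ML Kit Translator client
class FakeTranslator(val languagePair: Pair<String, String>) : Closeable {
    var closed = false
        private set

    override fun close() {
        closed = true
    }
}

// Minimal LRU cache that closes clients when they leave the cache (not android.util.LruCache)
class ClosingLruCache<K, V : Closeable>(
    private val maxEntries: Int,
    private val create: (K) -> V
) {
    // accessOrder = true: iteration starts with the least recently used entry
    private val clients = object : LinkedHashMap<K, V>(16, 0.75f, true) {
        override fun removeEldestEntry(eldest: MutableMap.MutableEntry<K, V>?): Boolean {
            val evict = this.size > maxEntries
            // Close the least recently used client before it is dropped
            if (evict) eldest?.value?.close()
            return evict
        }
    }

    // Return the cached client for this key, creating it on first use
    operator fun get(key: K): V = clients.getOrPut(key) { create(key) }

    val count: Int
        get() = clients.size

    // Close and drop every cached client
    fun evictAll() {
        clients.values.forEach { it.close() }
        clients.clear()
    }
}
```

Keying the cache by language pair means switching back and forth between a few target languages reuses existing clients instead of creating new ones each time.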

Now replace the //TODO in the sourceLang field definition in MainViewModel.kt with the following code. This snippet calls the language identification method and assigns the result only if it is not undetermined ("und"). An undetermined language means the API could not identify the language from its list of supported languages.

MainViewModel.kt

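```kotlin
import androidx.lifecycle.MutableLiveData
import androidx.lifecycle.Transformations

// sourceLang field in MainViewModel (Language is the sample project's language class)
val sourceLang = Transformations.switchMap(sourceText) { text ->
    val result = MutableLiveData<Language>()
    // Identify the language of the recognized text
    languageIdentifier.identifyLanguage(text)
        .addOnSuccessListener { languageCode ->
            // "und" means the language could not be identified
            if (languageCode != "und")
                result.value = Language(languageCode)
        }
    result
}
```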
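The "und" check is easy to test on its own. The plain-Kotlin sketch below separates it from the LiveData plumbing; `DetectedLanguage` is a hypothetical stand-in for the sample's Language class, and it uses `java.util.Locale` to turn the returned language tag into a readable name.

```kotlin
import java.util.Locale

object LanguageCodes {
    // ML Kit's language identifier reports "und" when it cannot determine the language
    const val UNDETERMINED = "und"
}

// Hypothetical stand-in for the sample's Language class
data class DetectedLanguage(val code: String) {
    // Human-readable English name for the language tag
    val displayName: String
        get() = Locale.forLanguageTag(code).getDisplayName(Locale.ENGLISH)
}

// Mirrors the check in sourceLang: only produce a result when a language was identified
fun toDetectedLanguage(languageCode: String): DetectedLanguage? =
    if (languageCode == LanguageCodes.UNDETERMINED) null else DetectedLanguage(languageCode)
```

Returning `null` for "und" keeps the previous value in place instead of replacing it with a meaningless language.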

Now run the app on your device again.

Once the app launches, it should start recognizing text from the camera and identifying the text's language in real time. Point your camera at any text to confirm.

6- Add translation

Replace the translate() function in MainViewModel.kt with the following snippet. This function takes the source language, the target language, and the source text, and performs the translation. Note that if the model for the chosen target language has not yet been downloaded to the device, we call downloadModelIfNeeded() to download it and then proceed with the translation.

MainViewModel.kt

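```kotlin
import android.os.Handler
import android.os.Looper
import com.google.android.gms.tasks.Task
import com.google.android.gms.tasks.Tasks
import com.google.mlkit.nl.translate.TranslateLanguage
import com.google.mlkit.nl.translate.TranslatorOptions

// Inside MainViewModel (sourceText, sourceLang, targetLang, modelDownloading, translating,
// modelDownloadTask and translators are fields of the sample project)
private fun translate(): Task<String> {
    val text = sourceText.value
    val source = sourceLang.value
    val target = targetLang.value
    // Skip this request while a model download or a translation is already running
    if (modelDownloading.value != false || translating.value != false) {
        return Tasks.forCanceled()
    }
    // Nothing to translate yet
    if (source == null || target == null || text == null || text.isEmpty()) {
        return Tasks.forResult("")
    }
    // Map the language codes to ML Kit translation languages (null if unsupported)
    val sourceLangCode = TranslateLanguage.fromLanguageTag(source.code)
    val targetLangCode = TranslateLanguage.fromLanguageTag(target.code)
    if (sourceLangCode == null || targetLangCode == null) {
        return Tasks.forCanceled()
    }
    val options = TranslatorOptions.Builder()
        .setSourceLanguage(sourceLangCode)
        .setTargetLanguage(targetLangCode)
        .build()
    val translator = translators[options]
    modelDownloading.setValue(true)

    // Watchdog: unblock long-running downloads after 15 seconds
    Handler(Looper.getMainLooper()).postDelayed({ modelDownloading.setValue(false) }, 15000)
    // Download the model only if it is not on the device yet
    modelDownloadTask = translator.downloadModelIfNeeded().addOnCompleteListener {
        modelDownloading.setValue(false)
    }
    translating.value = true
    // Translate once the model is available
    return modelDownloadTask.onSuccessTask {
        translator.translate(text)
    }.addOnCompleteListener {
        translating.value = false
    }
}
```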
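The early returns at the top of translate() decide whether a request is cancelled, answered with an empty string, or actually run. The framework-free sketch below isolates that decision so it can be unit-tested; `TranslationPlan`, `planTranslation`, and the `isSupported` predicate are hypothetical names, with `isSupported` standing in for the assumption that TranslateLanguage.fromLanguageTag() yields null for unsupported tags.

```kotlin
// Hypothetical model of the guard checks at the top of translate()
sealed class TranslationPlan {
    // A download or translation is already running, or a language is unsupported
    object Cancel : TranslationPlan()

    // Nothing to translate yet (translate() returns "")
    object Empty : TranslationPlan()

    // All inputs are present and supported
    data class Run(val source: String, val target: String, val text: String) : TranslationPlan()
}

fun planTranslation(
    text: String?,
    sourceCode: String?,
    targetCode: String?,
    busy: Boolean,
    isSupported: (String) -> Boolean
): TranslationPlan {
    if (busy) return TranslationPlan.Cancel
    if (sourceCode == null || targetCode == null || text == null || text.isEmpty()) {
        return TranslationPlan.Empty
    }
    if (!isSupported(sourceCode) || !isSupported(targetCode)) return TranslationPlan.Cancel
    return TranslationPlan.Run(sourceCode, targetCode, text)
}
```

Checking the busy flag first matches the order in translate(): a request that arrives mid-download is cancelled even if its inputs are valid.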

That completes the final step.

Once the app loads, it should show the recognized text, the identified language, and the text translated into the chosen language. You can choose any of the 59 languages.
